Turning Downtime into Discovery: Asking Better Questions in Forensic Mapping

- Earl Bakke

- 5 days ago

Sometimes, life forces you to hit the pause button. Recently, a fall and subsequent neck surgery left me with some unexpected downtime. Rather than just resting, I decided to use this time to dive deep into a problem that has always bothered me in forensic search operations: Why do we still rely on our eyes when the data can see so much more?

I want to be clear—I am by no means an expert in photogrammetry. I’m just a very curious person looking for more tools to put in the toolbox for missing person cases. I believe that everything is discoverable if you just ask the right questions.

My question was simple: If we can't see the color difference, can we see the shape difference?

The Experiment

I ran a blind test in a wooded area, simulating a "hasty concealment" scenario (a body covered by debris).

The Setup: A target located 63 feet from the roadway—a classic "drag distance" for roadside disposal.

The Visual Check: I looked at the standard drone photo (RGB). It was a chaotic mess of grey sticks and deadfall. Honestly? I would have walked right past it.

The "What If" Workflow: I stopped using the default settings and tweaked the processing to hunt for volume instead of color.

"Mild" Filtering: I forced the software to keep the "noise" (sticks/leaves) rather than smoothing it out.

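The trade-off behind the "Mild" setting can be illustrated with a generic median filter on a one-dimensional height profile. This is a stand-in chosen for illustration, not the filtering any particular photogrammetry package performs: a narrow window leaves a small debris pile intact, while a wide window treats it as noise and flattens it. The `median_smooth` function, the profile values, and the window sizes are all hypothetical.

```python
from statistics import median


def median_smooth(profile, window):
    """Median-filter a 1-D height profile (hypothetical helper).

    window: odd number of samples; edges use a truncated window.
    """
    half = window // 2
    smoothed = []
    for i in range(len(profile)):
        lo, hi = max(0, i - half), min(len(profile), i + half + 1)
        smoothed.append(median(profile[lo:hi]))
    return smoothed


# Hypothetical heights (feet above ground) along a transect
# that crosses a narrow debris pile two samples wide.
profile = [0.0, 0.1, 0.0, 1.2, 1.1, 0.0, 0.1, 0.0, 0.0]

print(max(median_smooth(profile, 3)))  # light filtering: 1.1, the pile survives
print(max(median_smooth(profile, 7)))  # heavy filtering: 0.1, the pile is smoothed away
```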
The "Body Filter": I tightened the ground classification threshold to 0.5 feet (about 15 cm), so that low-profile objects wouldn't be misclassified as "ground" and erased.

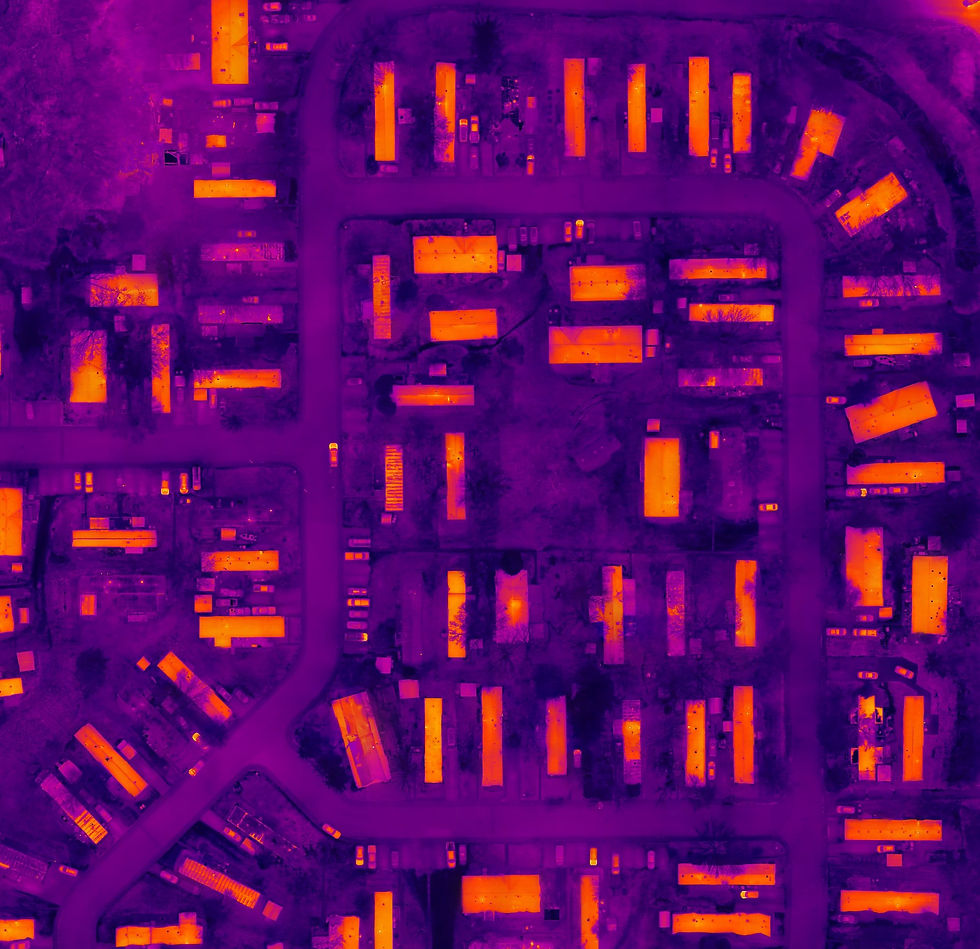
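The "Body Filter" step can be pictured with a deliberately simplified ground classifier. Real photogrammetry and LiDAR tools typically use more elaborate surface-fitting algorithms; this sketch just assumes the lowest point in each grid cell approximates the local ground, and labels a point as ground only if it sits within 0.5 feet of that minimum. The `classify_ground` function, the 3-foot cell size, and the sample points are hypothetical.

```python
from collections import defaultdict

GROUND_TOLERANCE_FT = 0.5  # the tightened threshold from the text
CELL_SIZE_FT = 3.0         # hypothetical grid cell size for the local ground estimate


def classify_ground(points, tolerance=GROUND_TOLERANCE_FT, cell=CELL_SIZE_FT):
    """Label each (x, y, z) point, in feet, as ground (True) or non-ground (False).

    Simplified approach: the lowest z in each grid cell stands in for the
    local ground surface, and a point counts as ground only if it lies
    within `tolerance` of that minimum.
    """
    cell_min = defaultdict(lambda: float("inf"))
    for x, y, z in points:
        key = (int(x // cell), int(y // cell))
        cell_min[key] = min(cell_min[key], z)

    labels = []
    for x, y, z in points:
        key = (int(x // cell), int(y // cell))
        labels.append(z - cell_min[key] <= tolerance)
    return labels


# Hypothetical sample: bare dirt plus a low debris pile about 1 ft high.
sample = [
    (0.5, 0.5, 100.0),  # dirt
    (1.0, 1.2, 100.2),  # dirt, small bump
    (1.5, 1.5, 101.0),  # debris, 1 ft above the cell minimum
    (2.0, 0.8, 100.9),  # debris
]
print(classify_ground(sample))  # [True, True, False, False]
```

Re-running it with a looser tolerance, such as `classify_ground(sample, tolerance=2.0)`, labels every sample point as ground, including the 1-foot debris pile, which is the kind of erasure the tighter threshold is meant to prevent.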

The "Traffic Light" Map: I applied a custom color palette. Green was safe ground. Red was anything rising more than 12 inches above the dirt.
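One common way to build a map like this, assumed here because the post doesn't describe the exact method, is to subtract a ground-only elevation grid (a terrain model) from the full surface grid, which leaves the height of whatever sits on the ground, and then color each cell by that height. The sketch below uses the 12-inch cutoff from the text; the `traffic_light` function and the grid values are hypothetical, and all heights are in feet.

```python
RED_THRESHOLD_FT = 1.0  # 12 inches, expressed in feet


def traffic_light(dsm, dtm, threshold=RED_THRESHOLD_FT):
    """Return a grid of "red"/"green" labels from two same-shaped elevation grids.

    dsm: surface heights including debris; dtm: ground-only heights.
    Their difference is the height of whatever sits on top of the ground.
    """
    labels = []
    for surface_row, ground_row in zip(dsm, dtm):
        row = []
        for surface, ground in zip(surface_row, ground_row):
            height_above_ground = surface - ground
            # Strictly "more than 12 inches" turns a cell red.
            row.append("red" if height_above_ground > threshold else "green")
        labels.append(row)
    return labels


# Hypothetical 2x3 grids, in feet.
dsm = [[100.1, 101.4, 100.0],
       [100.2, 101.2, 100.3]]
dtm = [[100.0, 100.1, 100.0],
       [100.1, 100.1, 100.2]]

for row in traffic_light(dsm, dtm):
    print(row)  # ['green', 'red', 'green'] for both rows
```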

The "Aha!" Moment

When I applied the filter, the grey mess of sticks didn't just appear. It glowed. The software flagged a distinct 15-square-foot Red Ring, an exact geometric match for a human-sized concealment site. It found the volume where the eye missed the contrast.
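The post doesn't say how the software measured the flagged area. As an assumed illustration, a red/green grid can be grouped into connected patches with a flood fill, and each patch's footprint is its cell count times the area of one cell. The `red_patches` function, the 1-foot cell size, and the grid are hypothetical; the grid is built so that one patch happens to cover 15 square feet.

```python
from collections import deque


def red_patches(labels, cell_size_ft=1.0):
    """Group 4-connected "red" cells and return each patch's area in square feet.

    labels: 2-D grid of "red"/"green" strings; cell_size_ft: grid spacing.
    """
    rows, cols = len(labels), len(labels[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != "red" or seen[r][c]:
                continue
            # Breadth-first flood fill over this patch, counting its cells.
            queue = deque([(r, c)])
            seen[r][c] = True
            count = 0
            while queue:
                cr, cc = queue.popleft()
                count += 1
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    in_bounds = 0 <= nr < rows and 0 <= nc < cols
                    if in_bounds and not seen[nr][nc] and labels[nr][nc] == "red":
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            areas.append(count * cell_size_ft ** 2)
    return areas


# Hypothetical grid: one 3x5 block of red cells plus an isolated red cell (a stray stick).
G, R = "green", "red"
grid = [
    [G, G, G, G, G, G, G],
    [G, R, R, R, R, R, G],
    [G, R, R, R, R, R, G],
    [G, R, R, R, R, R, G],
    [G, G, G, G, G, G, R],
]
print(red_patches(grid))  # [15.0, 1.0]
```

Filtering patches by footprint is one way to separate a concealment-sized anomaly from isolated sticks; which size range to flag would be a field judgment, not something this sketch decides.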

The Bigger Picture

While LiDAR remains the heavy hitter for penetrating dense canopy, this test showed that standard photogrammetry, when processed correctly, can be an incredibly powerful tool in our kit.

And this is just the start. As we integrate more advanced software and AI into these cases, we will only get faster and more accurate at finding the lost.

The Takeaway

Recovery has given me the time to learn, and I'm eager to get back in the field to deploy this. We have the technology to make the invisible visible; we just have to be curious enough to ask the data the right questions.

If you are in public safety or SAR and want to geek out on photogrammetry settings, feel free to reach out. I’ve got plenty of time to chat!
